LiDPM: Rethinking Point Diffusion for Lidar Scene Completion

Tetiana Martyniuk, Gilles Puy, Alexandre Boulch, Renaud Marlet, Raoul de Charette

IEEE Intelligent Vehicles Symposium (IV 2025), 2025

Abstract

Training diffusion models that operate directly on lidar points at the scale of outdoor scenes is challenging, because fine-grained details must be generated from white noise over a broad field of view. The latest works addressing scene completion with diffusion models tackle this problem by reformulating the original DDPM as a local diffusion process. This contrasts with the common practice at the object level, where vanilla DDPMs are used.
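For reference, the vanilla DDPM forward process noises a clean point cloud $x_0$ (an $N \times 3$ array of coordinates) as in the first line below, with $\bar\alpha_t = \prod_{s \le t} (1 - \beta_s)$. The second line shows one way to write a local variant. It assumes the $\sqrt{\bar\alpha_t}$ scaling is dropped so that each point is perturbed around its own position. This is a sketch of the distinction, not the exact formulation used by prior work.

$$
x_t = \sqrt{\bar\alpha_t}\, x_0 + \sqrt{1 - \bar\alpha_t}\, \epsilon, \qquad \epsilon \sim \mathcal{N}(0, I) \qquad \text{(vanilla DDPM)}
$$

$$
x_t = x_0 + \sqrt{1 - \bar\alpha_t}\, \epsilon \qquad \text{(local variant, assumed form)}
$$

In the vanilla form, $x_T$ approaches pure white noise as $\bar\alpha_T \to 0$, which is what makes generating a full outdoor scene from scratch hard. In the assumed local form, $x_t$ stays centered on $x_0$ at every step.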

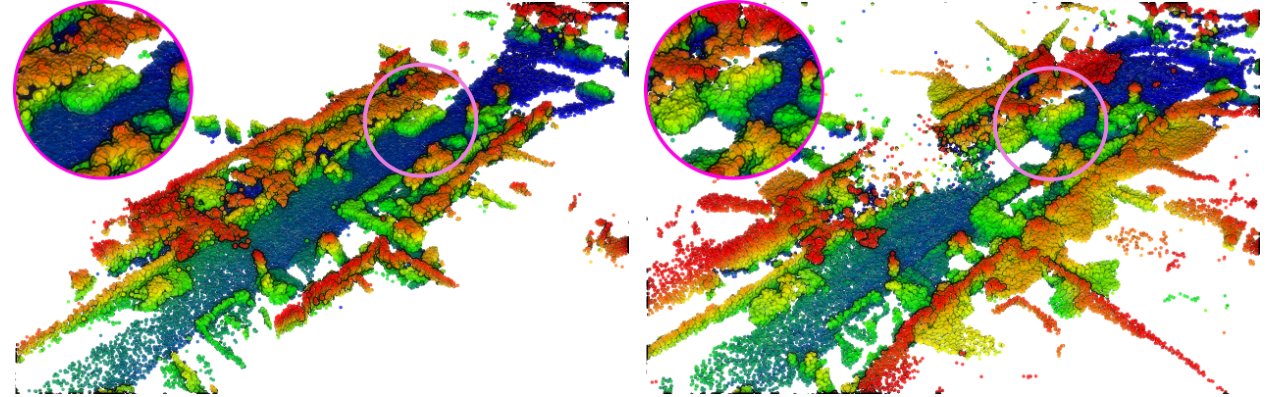

In this paper, we close the gap between these two lines of research. We identify approximations in the local diffusion formulation and show that they are not required to operate at the scene level. We further show that a vanilla DDPM with a well-chosen starting point is enough for completion. Finally, we demonstrate that our method, LiDPM, achieves better scene completion results on SemanticKITTI.
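The central idea, a vanilla DDPM started from a well-chosen point rather than from white noise, can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not the authors' implementation. The linear noise schedule, the starting timestep `t0`, the helper names `linear_beta_schedule`, `q_sample`, and `sample_from_start`, and the zero-noise placeholder denoiser are all hypothetical. `x_start` stands for some coarse estimate of the complete scene. How LiDPM actually builds that starting cloud is not described here.

```python
import numpy as np


def linear_beta_schedule(T=1000, beta_start=1e-4, beta_end=2e-2):
    # Linear DDPM noise schedule (an assumed choice, not taken from the paper).
    betas = np.linspace(beta_start, beta_end, T)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    return betas, alphas, alpha_bars


def q_sample(x0, t, alpha_bars, rng):
    # Vanilla DDPM forward process: x_t = sqrt(a_bar_t) * x0 + sqrt(1 - a_bar_t) * eps.
    eps = rng.standard_normal(x0.shape)
    return np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * eps


def sample_from_start(x_start, eps_model, t0, betas, alphas, alpha_bars, rng):
    # Noise the starting cloud to step t0, then run standard ancestral DDPM
    # sampling from t0 down to 0 instead of starting from white noise at T - 1.
    x = q_sample(x_start, t0, alpha_bars, rng)
    for t in range(t0, -1, -1):
        eps_hat = eps_model(x, t)  # predicted noise at step t
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps_hat) / np.sqrt(alphas[t])
        if t > 0:
            # Posterior variance choice sigma_t^2 = beta_t.
            x = mean + np.sqrt(betas[t]) * rng.standard_normal(x.shape)
        else:
            x = mean  # no noise is added on the final step
    return x


# Usage with placeholders: a random "scene" and a denoiser that predicts zero noise.
rng = np.random.default_rng(0)
betas, alphas, alpha_bars = linear_beta_schedule()
x_start = rng.uniform(-10.0, 10.0, size=(2048, 3))  # hypothetical coarse scene, N x 3
zero_eps_model = lambda x, t: np.zeros_like(x)      # stand-in for a trained network
completed = sample_from_start(x_start, zero_eps_model, 300, betas, alphas, alpha_bars, rng)
print(completed.shape)  # (2048, 3)
```

Starting at an intermediate `t0` keeps part of the starting cloud's structure in the noised sample, so the network only has to refine it. With `t0 = T - 1`, the sketch reduces to ordinary DDPM sampling from almost pure noise.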