Principal Component Analysis: formalism

Linear algebra

- Change of basis: endomorphism

- Projection: linear map of

Principal Component Analysis

PCA geometric objective

PCA searches for the vector subspace (of reduced dimension) onto which the data can be projected most accurately, i.e. with the smallest distance between the points and their projections.
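A minimal NumPy sketch of this objective on synthetic 2D data (the data, the seed and the helper `reconstruction_error` are illustrative assumptions, not from the course). Among lines through the mean, the one spanned by the top eigenvector of the covariance matrix leaves a smaller mean squared distance between the points and their projections than, for example, the horizontal axis.

```python
import numpy as np

def reconstruction_error(X, u):
    """Mean squared distance between the centered points and their projection on span(u)."""
    u = u / np.linalg.norm(u)
    Xc = X - X.mean(axis=0)
    proj = np.outer(Xc @ u, u)           # orthogonal projection of each point onto span(u)
    return np.mean(np.sum((Xc - proj) ** 2, axis=1))

rng = np.random.default_rng(0)
t = rng.normal(scale=3.0, size=200)      # large spread along (1, 1)
s = rng.normal(scale=0.5, size=200)      # small spread along (1, -1)
X = np.column_stack([t + s, t - s])

eigvals, eigvecs = np.linalg.eigh(np.cov(X, rowvar=False))   # ascending eigenvalues
u_best = eigvecs[:, -1]                  # eigenvector of the largest eigenvalue

err_best = reconstruction_error(X, u_best)
err_axis = reconstruction_error(X, np.array([1.0, 0.0]))
print(err_best < err_axis)  # True
```

The remaining error along the best line equals the smallest eigenvalue (up to the n versus n-1 normalization of `np.cov`): what is lost is exactly the variance along the discarded direction.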

Principal Component Analysis: formalism

Statistics

Let

- Average

- Variance

- Covariance

Let

- Variance-Covariance matrix
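The formulas on this slide did not survive extraction. One standard way to write them, assuming n samples $x_1, \dots, x_n \in \mathbb{R}^p$ with coordinates $x_{ij}$ and the 1/n normalization (the unbiased estimator uses 1/(n-1), which does not change the eigenvectors), is:

```latex
\bar{x}_j = \frac{1}{n} \sum_{i=1}^{n} x_{ij}
\qquad
\operatorname{Var}(x_j) = \frac{1}{n} \sum_{i=1}^{n} (x_{ij} - \bar{x}_j)^2

\operatorname{Cov}(x_j, x_k) = \frac{1}{n} \sum_{i=1}^{n} (x_{ij} - \bar{x}_j)(x_{ik} - \bar{x}_k)

\Sigma = \frac{1}{n} \sum_{i=1}^{n} (x_i - \bar{x})(x_i - \bar{x})^\top,
\qquad \Sigma_{jk} = \operatorname{Cov}(x_j, x_k), \quad \Sigma_{jj} = \operatorname{Var}(x_j)
```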

Principal Component Analysis

PCA statistical objective

PCA aims at:

- Dispersion maximization along the first dimensions of the basis:

- Dimensions are not correlated:
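Both objectives follow from the eigendecomposition of the variance-covariance matrix $\Sigma$. A short derivation under the standard assumptions (centered data, unit-norm direction $u$; notation introduced here):

```latex
% First axis: direction u of maximal variance of the projected data
\max_{\|u\| = 1} \operatorname{Var}(u^\top x) = \max_{\|u\| = 1} u^\top \Sigma u

% Lagrangian for the unit-norm constraint
\mathcal{L}(u, \lambda) = u^\top \Sigma u - \lambda (u^\top u - 1),
\qquad
\nabla_u \mathcal{L} = 2 \Sigma u - 2 \lambda u = 0
\;\Rightarrow\; \Sigma u = \lambda u,
\qquad u^\top \Sigma u = \lambda

% Decorrelation: orthonormal eigenvectors u_j, u_k of the symmetric matrix \Sigma, j \neq k
\operatorname{Cov}(u_j^\top x, u_k^\top x) = u_j^\top \Sigma u_k = \lambda_k \, u_j^\top u_k = 0
```

So the first axis is the eigenvector with the largest eigenvalue, and the variance along it is that eigenvalue; repeating the maximization under orthogonality to the previous axes gives the next eigenvectors in order of decreasing eigenvalue.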

Principal Component Analysis: algorithm

Samples

- Center the sample

- Build the variance-covariance matrix

- Diagonalize

- Sort the eigenvalues in decreasing order (together with their eigenvectors)
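A minimal NumPy sketch of these four steps (the function name `pca`, the synthetic data and the 1/n normalization are assumptions). `np.linalg.eigh` is used because the variance-covariance matrix is symmetric; it returns eigenvalues in ascending order, hence the explicit sort.

```python
import numpy as np

def pca(X):
    """Return (eigenvalues, eigenvectors, mean), eigenvalues sorted in decreasing order.

    X has one sample per row; eigenvectors are the columns of the returned matrix.
    """
    mean = X.mean(axis=0)
    Xc = X - mean                              # 1. center the sample
    C = Xc.T @ Xc / X.shape[0]                 # 2. variance-covariance matrix (1/n)
    eigvals, eigvecs = np.linalg.eigh(C)       # 3. diagonalize (C is symmetric)
    order = np.argsort(eigvals)[::-1]          # 4. sort in decreasing order
    return eigvals[order], eigvecs[:, order], mean

rng = np.random.default_rng(1)
X = rng.normal(size=(500, 3)) @ np.array([[3.0, 0.0, 0.0],
                                          [1.0, 1.0, 0.0],
                                          [0.0, 0.5, 0.2]])
eigvals, U, mean = pca(X)
Y = (X - mean) @ U                             # coordinates in the eigenvector basis
print(eigvals)
print(np.round(Y.T @ Y / len(Y), 6))           # diagonal matrix of the eigenvalues
```

In the new coordinates `Y` the variance-covariance matrix is diagonal, with the sorted eigenvalues on the diagonal: the components are uncorrelated and their variances decrease.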

Principal Component Analysis: properties

- Transfer matrix

- Projection matrix
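The matrices themselves are missing from the slide. A sketch under the usual conventions, assuming $U$ holds the sorted eigenvectors as columns: the change of basis maps a centered point $x - \bar{x}$ to $U^\top (x - \bar{x})$, and the projection onto the first $k$ components is $P = U_k U_k^\top$ (variable names and data are illustrative).

```python
import numpy as np

rng = np.random.default_rng(2)
X = rng.normal(size=(300, 4)) * np.array([5.0, 2.0, 0.5, 0.1])   # one sample per row
mean = X.mean(axis=0)
Xc = X - mean
eigvals, U = np.linalg.eigh(Xc.T @ Xc / len(X))
U = U[:, np.argsort(eigvals)[::-1]]     # columns: eigenvectors, decreasing eigenvalue

# Transfer (change of basis): y_i = U^T (x_i - mean), written row-wise
Y = Xc @ U
X_back = Y @ U.T + mean                 # U is orthogonal, so U^{-1} = U^T

# Projection onto the subspace spanned by the first k eigenvectors
k = 2
U_k = U[:, :k]
P = U_k @ U_k.T                         # p x p, symmetric
X_proj = Xc @ P + mean                  # row-wise x_i -> mean + P (x_i - mean)

print(np.allclose(X_back, X))           # True: the change of basis is invertible
print(np.allclose(P @ P, P))            # True: a projection is idempotent
```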

Principal Component Analysis: properties

- The eigenvectors, sorted by decreasing eigenvalue

- Variance: the variance of the data along each eigenvector equals its eigenvalue

- The principal components (with a large variance) represent the signal

- Low-variance components are considered noise

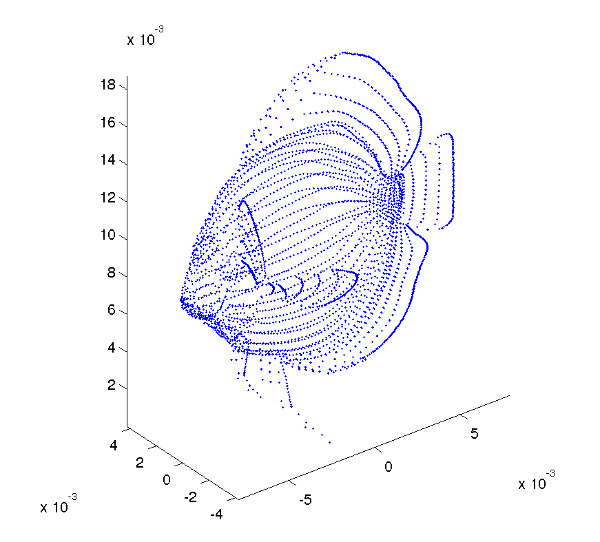
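A sketch of the signal/noise reading, under the assumption that the signal lies in a low-dimensional subspace and the noise is small, isotropic and Gaussian (synthetic data, hypothetical sizes `n`, `p`, `k`). Keeping only the k high-variance components and discarding the others brings the data closer to the clean signal; when the noise variance is comparable to the signal variance, this separation becomes unreliable.

```python
import numpy as np

rng = np.random.default_rng(3)
n, p, k = 400, 10, 2
basis = np.linalg.qr(rng.normal(size=(p, k)))[0]          # orthonormal basis of a k-dim subspace
clean = rng.normal(scale=5.0, size=(n, k)) @ basis.T      # high-variance signal in that subspace
noisy = clean + rng.normal(scale=0.3, size=(n, p))        # low-variance isotropic noise

mean = noisy.mean(axis=0)
Xc = noisy - mean
eigvals, U = np.linalg.eigh(Xc.T @ Xc / n)
U_k = U[:, np.argsort(eigvals)[::-1][:k]]                 # keep the k largest-variance components
denoised = Xc @ U_k @ U_k.T + mean                        # drop the low-variance ones

err_noisy = np.mean((noisy - clean) ** 2)
err_denoised = np.mean((denoised - clean) ** 2)
print(err_noisy, err_denoised)
```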

Principal Component Analysis: examples

Back to the fishes

Points of

Variances:

Eigenvector basis:

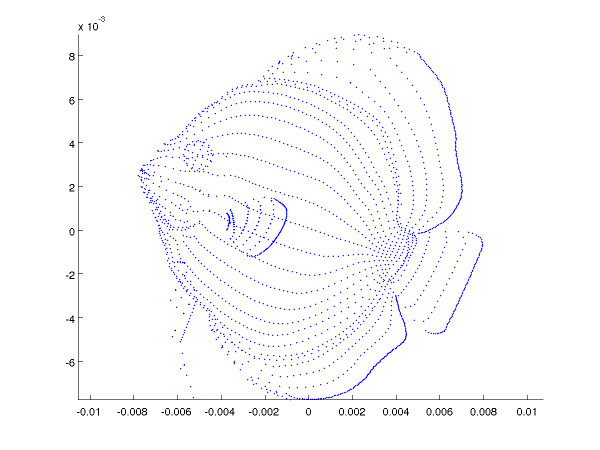

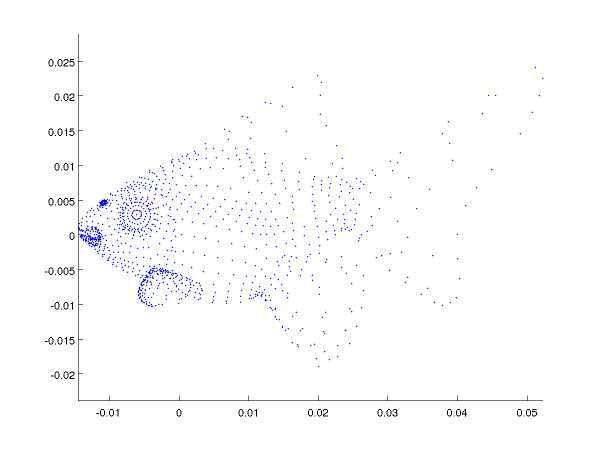

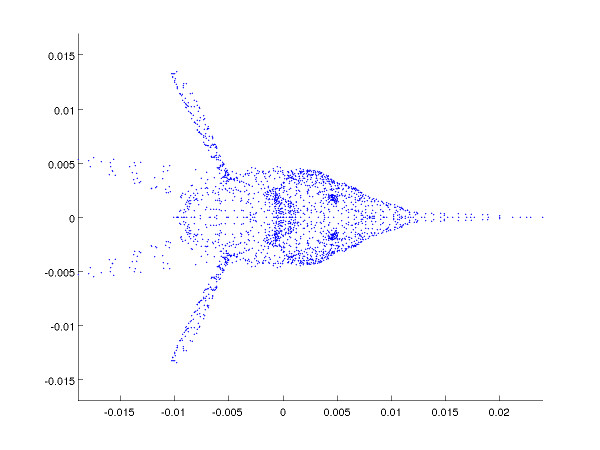

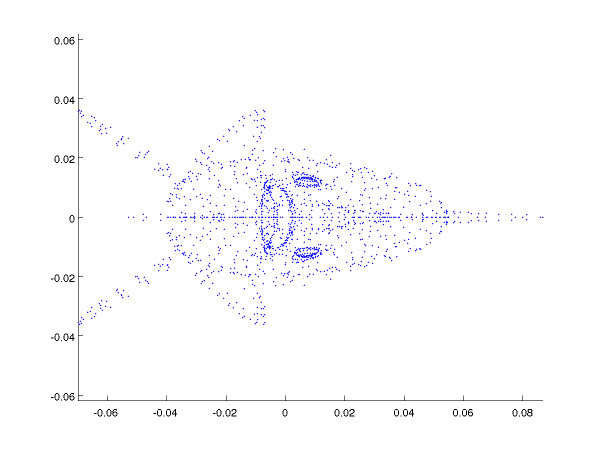

Principal Component Analysis: examples

Back to the fishes

Projection onto the first two components (or the last two)

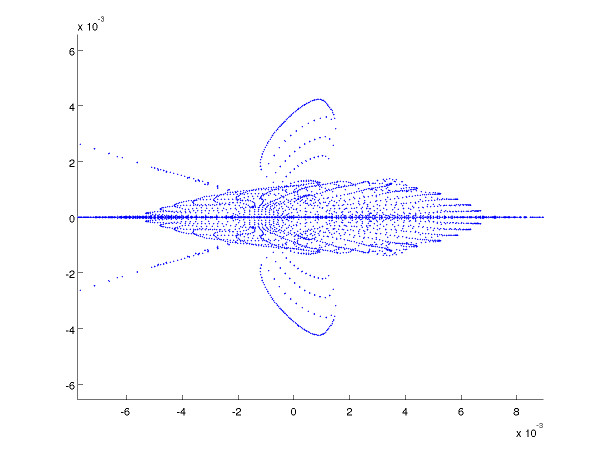

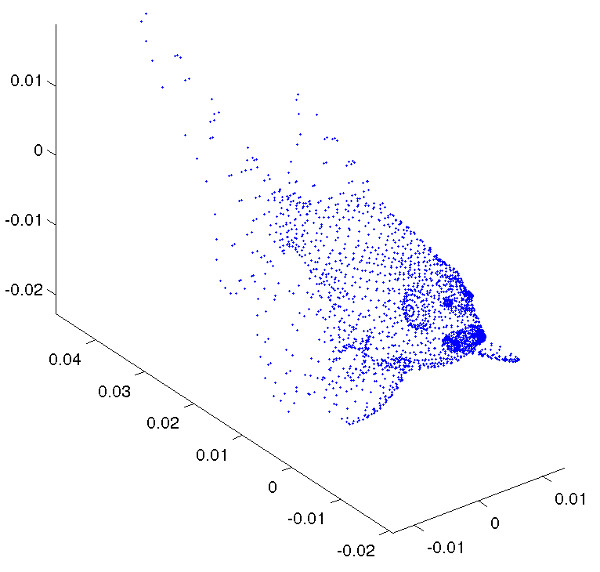

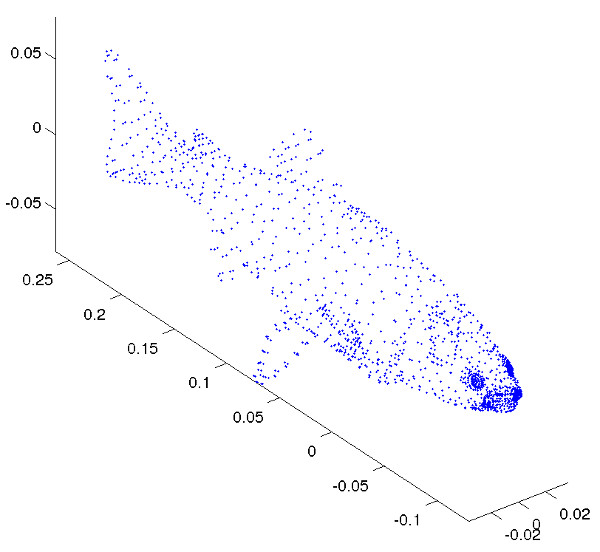

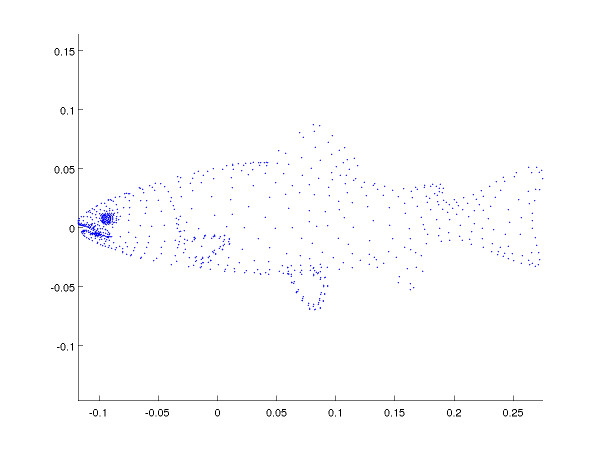

Principal Component Analysis: examples

More fishes

3D view vs. projection onto the first two components (canonical representations) and onto the last ones.

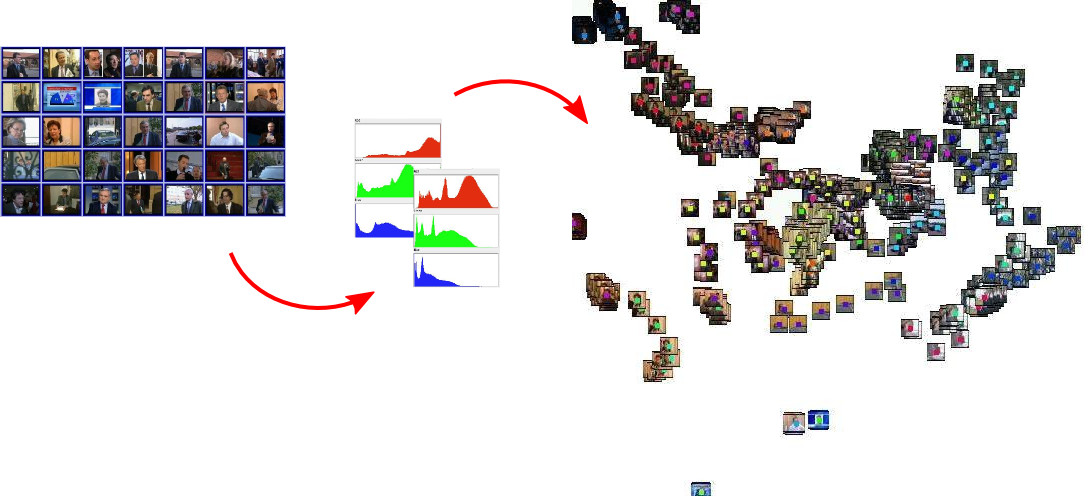

Principal Component Analysis: examples

Video analysis

Video frames converted to color histograms and visualized with the first two PCA components.
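A sketch of this pipeline on a hypothetical video (synthetic frames, 8 bins per color channel and histogram normalization are assumptions; the actual features used in the example are not specified). Each frame becomes a color histogram vector, and the first two PCA components give 2D points to plot.

```python
import numpy as np

def color_histogram(frame, bins=8):
    """Concatenated per-channel histograms of an (H, W, 3) frame with values in [0, 1], normalized."""
    hists = [np.histogram(frame[..., c], bins=bins, range=(0.0, 1.0))[0] for c in range(3)]
    h = np.concatenate(hists).astype(float)
    return h / h.sum()

rng = np.random.default_rng(14)
# Hypothetical video: 30 dark frames followed by 30 bright frames
frames = ([rng.uniform(0.0, 0.5, size=(24, 32, 3)) for _ in range(30)]
          + [rng.uniform(0.5, 1.0, size=(24, 32, 3)) for _ in range(30)])
H = np.array([color_histogram(f) for f in frames])          # (60, 24) feature matrix

Hc = H - H.mean(axis=0)
eigvals, U = np.linalg.eigh(Hc.T @ Hc / len(H))
coords = Hc @ U[:, np.argsort(eigvals)[::-1][:2]]           # 2D points to plot
print(coords.shape)   # (60, 2)
```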

Principal Component Analysis

Key points

- High dimension data representation

- Dimension reduction

- Variable decorrelation

- Based on data variance-covariance matrix diagonalization

Usage

- Data preprocessing for data analysis (see the first classes)

- Visualization

Clustering

Definition

- Find categories for close/similar objects

- Unsupervised classification of unlabeled data

Objectives

- Group similar data (requires a notion of distance)

- Categorization

K-means

- Let

- A cluster (indexed by

- Let

- Let

K-means

Algorithm minimizes:
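The minimized expression is missing from the slide. Assuming clusters $C_1, \dots, C_K$ with centers $\mu_1, \dots, \mu_K$ (notation introduced here), the standard k-means criterion is the within-cluster sum of squared distances; for fixed clusters it is minimized by taking each center as the mean of its cluster:

```latex
J(C_1, \dots, C_K, \mu_1, \dots, \mu_K) = \sum_{k=1}^{K} \sum_{x_i \in C_k} \| x_i - \mu_k \|^2
\qquad
\mu_k = \frac{1}{|C_k|} \sum_{x_i \in C_k} x_i
```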

K-means

Algorithm

Initialize

- Assign each

- Recompute the

(average location of the group)
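A minimal NumPy sketch of this loop (Lloyd's algorithm). The random choice of K samples as initial centers, the convergence test and the names `assign` and `kmeans` are assumptions:

```python
import numpy as np

def assign(X, centers):
    """Index of the nearest center (squared Euclidean distance) for each row of X."""
    d2 = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    return d2.argmin(axis=1)

def kmeans(X, K, n_iter=100, seed=0):
    """Lloyd's algorithm; initial centers are K distinct samples drawn at random."""
    rng = np.random.default_rng(seed)
    centers = X[rng.choice(len(X), size=K, replace=False)].astype(float)
    for _ in range(n_iter):
        labels = assign(X, centers)                 # assign each x_i to its nearest center
        new_centers = np.array([                    # recompute each center as its group mean
            X[labels == k].mean(axis=0) if np.any(labels == k) else centers[k]
            for k in range(K)
        ])
        if np.allclose(new_centers, centers):       # no center moved: converged
            break
        centers = new_centers
    return centers, assign(X, centers)

rng = np.random.default_rng(4)
X = np.vstack([rng.normal(loc=c, scale=0.3, size=(50, 2)) for c in [(0, 0), (5, 5), (0, 5)]])
centers, labels = kmeans(X, K=3)
print(centers.round(2))
```

Running it with different `seed` values can give different partitions when the clusters are less well separated, which is the local-minimum issue mentioned in the properties.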

K-means

Properties

- There is a finite number of possible partitions and no step increases the objective, so the algorithm converges

- But the solution may not be optimal (local minimum)

Initialization

- Initialization is important

- E.g., choose the initial
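The end of this sentence is missing. One widely used initialization, shown here as an example rather than as the course's choice, is k-means++ seeding: the first center is a random sample, and each next center is drawn with probability proportional to the squared distance to the nearest center already chosen (names and data are illustrative):

```python
import numpy as np

def kmeanspp_init(X, K, seed=0):
    """k-means++ seeding: returns K initial centers spread out over the data."""
    rng = np.random.default_rng(seed)
    centers = [X[rng.integers(len(X))]]                 # first center: uniform sample
    for _ in range(1, K):
        # squared distance of each point to its nearest already-chosen center
        d2 = np.min(((X[:, None, :] - np.array(centers)[None, :, :]) ** 2).sum(axis=2), axis=1)
        centers.append(X[rng.choice(len(X), p=d2 / d2.sum())])
    return np.array(centers, dtype=float)

rng = np.random.default_rng(5)
X = np.vstack([rng.normal(loc=c, scale=0.3, size=(50, 2)) for c in [(0, 0), (5, 5), (0, 5)]])
init = kmeanspp_init(X, K=3)
print(init.round(2))
```

The returned centers can replace the random initial centers of a k-means run; already-chosen points have probability zero, so no center is picked twice.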

K-means

Statistical variant

- Parameters of Gaussian Mixture Model (GMM) estimated by the Expectation-Maximization algorithm.

- Estimate:
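The estimated quantities are cut off above. A minimal sketch of EM for a 1D mixture of K Gaussians, estimating the mixture weights, means and variances (the 1D restriction, the quantile initialization, the fixed iteration count and the synthetic data are assumptions). The E-step computes soft assignments (responsibilities), which play the role of the hard assignment in k-means; the M-step recomputes weighted averages:

```python
import numpy as np

def gaussian_pdf(x, mu, var):
    """Density of N(mu, var) evaluated at x (broadcasts over arrays)."""
    return np.exp(-(x - mu) ** 2 / (2.0 * var)) / np.sqrt(2.0 * np.pi * var)

def em_gmm_1d(x, K=2, n_iter=200):
    """EM for a 1D Gaussian mixture; returns (weights, means, variances)."""
    w = np.full(K, 1.0 / K)                              # mixture weights
    mu = np.quantile(x, np.linspace(0.1, 0.9, K))        # initial means spread over the data
    var = np.full(K, x.var())                            # broad initial variances
    for _ in range(n_iter):
        # E-step: responsibilities r[i, k] = P(component k | x_i)
        dens = w * gaussian_pdf(x[:, None], mu, var)     # shape (n, K)
        r = dens / dens.sum(axis=1, keepdims=True)
        # M-step: weighted re-estimation of the parameters
        nk = r.sum(axis=0)
        w = nk / len(x)
        mu = (r * x[:, None]).sum(axis=0) / nk
        var = (r * (x[:, None] - mu) ** 2).sum(axis=0) / nk
    return w, mu, var

rng = np.random.default_rng(6)
x = np.concatenate([rng.normal(-2.0, 0.5, 300), rng.normal(3.0, 1.0, 700)])
w, mu, var = em_gmm_1d(x)
print(w.round(2), mu.round(2), var.round(2))
```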

K-means

Variants and tricks

- Fuzzy C-means:

- Different distance: Mahalanobis distance (FCM), complete covariance matrix (GMM)

- Outliers: if

- Criteria for
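The Fuzzy C-means formula is cut off above. A sketch of the standard update rules, with Euclidean distance, fuzzifier m = 2 and random initial memberships as assumptions: each point gets a membership in [0, 1] for every cluster instead of a single label, and the memberships of a point sum to 1.

```python
import numpy as np

def fuzzy_cmeans(X, K, m=2.0, n_iter=100, seed=0):
    """Fuzzy C-means; returns (centers, U) with U[i, k] the membership of x_i in cluster k."""
    rng = np.random.default_rng(seed)
    U = rng.random((len(X), K))
    U /= U.sum(axis=1, keepdims=True)                       # memberships of a point sum to 1
    for _ in range(n_iter):
        Um = U ** m
        centers = (Um.T @ X) / Um.sum(axis=0)[:, None]      # membership-weighted means
        d = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2)
        d = np.maximum(d, 1e-12)                            # avoid division by zero
        # u_ik = 1 / sum_j (d_ik / d_ij) ** (2 / (m - 1))
        U = 1.0 / ((d[:, :, None] / d[:, None, :]) ** (2.0 / (m - 1.0))).sum(axis=2)
    return centers, U

rng = np.random.default_rng(7)
X = np.vstack([rng.normal(loc=c, scale=0.3, size=(50, 2)) for c in [(0, 0), (5, 5), (0, 5)]])
centers, U = fuzzy_cmeans(X, K=3)
print(centers.round(2))
```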

Clustering: other approaches

Spectral clustering:

- Similarity matrix,

- Dimension reduction (first eigenvectors)

- K-means
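A minimal NumPy sketch of these three steps, assuming a Gaussian similarity with width `sigma`, the normalized affinity $D^{-1/2} W D^{-1/2}$ and row-normalized eigenvectors (one common variant among several; all names are hypothetical). Two concentric rings are used as data, a shape that plain k-means on the raw coordinates is not expected to separate:

```python
import numpy as np

def spectral_clustering(X, K, sigma=0.5, n_iter=50):
    """Return one label per row of X (a sketch of normalized spectral clustering)."""
    # 1. Similarity matrix: Gaussian kernel of pairwise squared distances
    d2 = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=2)
    W = np.exp(-d2 / (2.0 * sigma ** 2))
    np.fill_diagonal(W, 0.0)
    d_inv_sqrt = 1.0 / np.sqrt(W.sum(axis=1))
    A = d_inv_sqrt[:, None] * W * d_inv_sqrt[None, :]        # D^{-1/2} W D^{-1/2}
    # 2. Dimension reduction: eigenvectors of the K largest eigenvalues, rows normalized
    _, eigvecs = np.linalg.eigh(A)                            # ascending order
    E = eigvecs[:, -K:]
    E = E / np.linalg.norm(E, axis=1, keepdims=True)
    # 3. K-means in the embedded space (farthest-point initialization, then Lloyd steps)
    centers = [E[0]]
    for _ in range(1, K):
        dist = np.min([((E - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(E[dist.argmax()])
    centers = np.array(centers)
    for _ in range(n_iter):
        labels = ((E[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2).argmin(axis=1)
        centers = np.array([E[labels == k].mean(axis=0) if np.any(labels == k) else centers[k]
                            for k in range(K)])
    return labels

rng = np.random.default_rng(8)
theta = np.tile(np.linspace(0.0, 2.0 * np.pi, 100, endpoint=False), 2)
radius = np.repeat([1.0, 4.0], 100)                           # inner ring, then outer ring
X = np.column_stack([radius * np.cos(theta), radius * np.sin(theta)])
X += rng.normal(scale=0.05, size=X.shape)
labels = spectral_clustering(X, K=2)
print(np.bincount(labels))
```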

Clustering: other approaches

DBSCAN

- Data partitioning into categories of at least MinPts points within a radius ϵ

- Go through the data step by step, adding each reachable point to a category

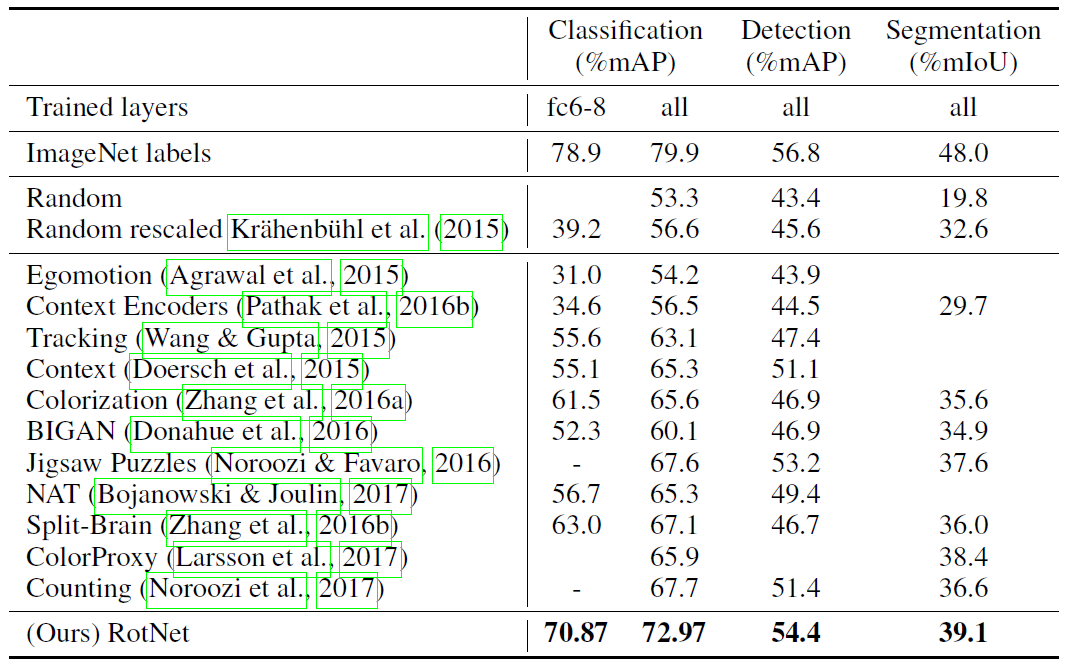
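A minimal sketch of DBSCAN in NumPy (names and data are illustrative; the neighborhood of a point includes the point itself, as in scikit-learn's convention). A point with at least `min_pts` neighbors within `eps` is a core point; clusters grow step by step from core points, and points reachable from no core point are labeled -1 (noise):

```python
import numpy as np

def dbscan(X, eps, min_pts):
    """Return labels: cluster index >= 0, or -1 for noise."""
    n = len(X)
    d2 = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=2)
    neighbors = [np.flatnonzero(d2[i] <= eps ** 2) for i in range(n)]  # includes point i
    is_core = np.array([len(nb) >= min_pts for nb in neighbors])
    labels = np.full(n, -1)
    cluster = 0
    for i in range(n):
        if labels[i] != -1 or not is_core[i]:
            continue
        labels[i] = cluster                   # start a new cluster from core point i
        stack = [i]
        while stack:
            j = stack.pop()
            if not is_core[j]:
                continue                      # border point: joins, but does not expand
            for q in neighbors[j]:
                if labels[q] == -1:
                    labels[q] = cluster
                    stack.append(q)
        cluster += 1
    return labels

rng = np.random.default_rng(9)
X = np.vstack([rng.normal((0.0, 0.0), 0.2, size=(40, 2)),
               rng.normal((3.0, 3.0), 0.2, size=(40, 2)),
               [[10.0, -10.0]]])              # one isolated outlier
labels = dbscan(X, eps=0.5, min_pts=4)
print(labels)
```

Unlike k-means, the number of clusters is not fixed in advance, and isolated points are left out of every cluster.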

Self-supervised learning

Overview

- Principles

- Image-based transformations

- Contrastive approaches

- SimCLR

Self-supervised learning

Objective

Create good features for a downstream task.

Pre-training of the network

Create a pretext task to train the network on.

The labels for this task are generated automatically.

Downstream task

This is the real final objective. It could be classification, regression, etc.

It is trained in a supervised manner.

Image-based transformation

Key idea

To predict the transformation of an image, you must understand what is in the image.

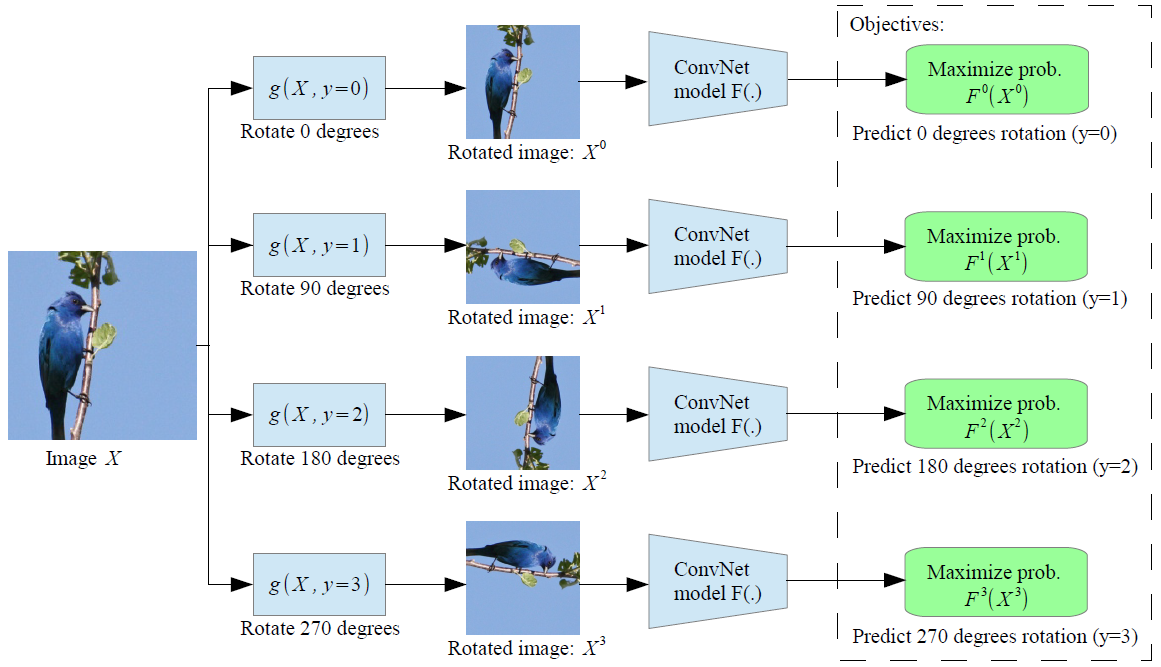

Image-based transformation: rotation

Transformation

Random rotation of the image.

Four classes: 0°, 90°, 180°, 270°.

Simple classification problem.

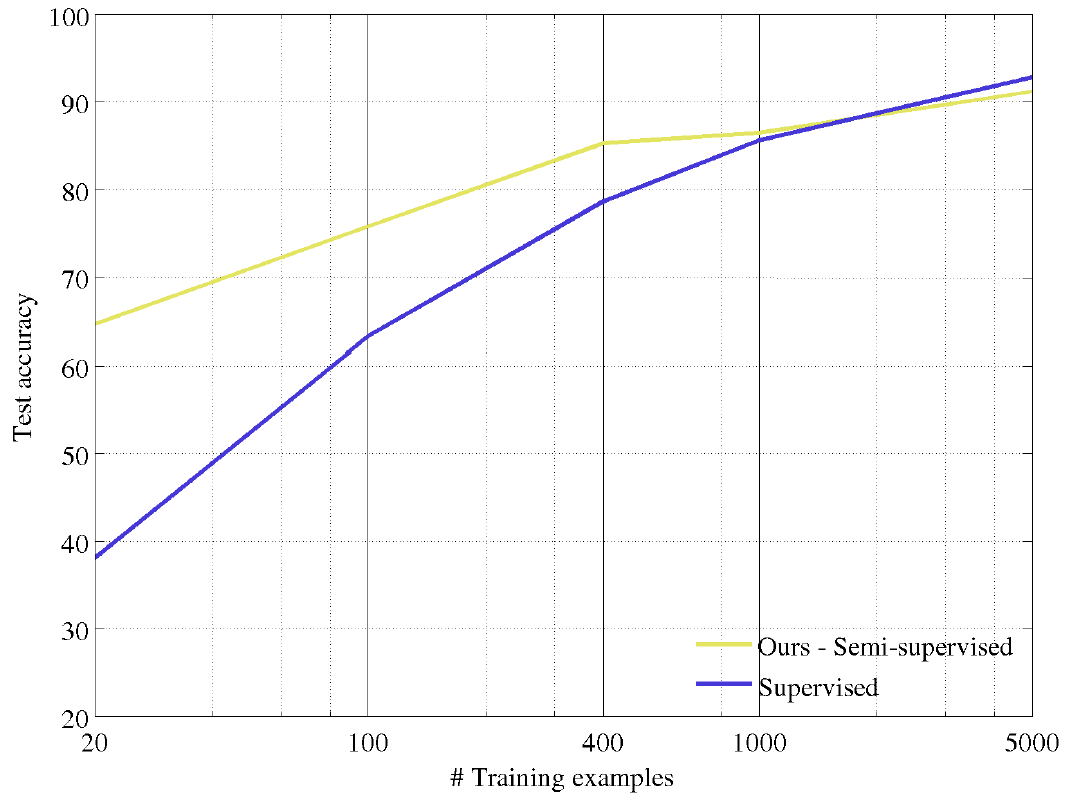
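A sketch of how the pretext labels are generated automatically (square images as NumPy arrays and the counterclockwise convention of `np.rot90` are assumptions; the classifier itself is not shown): each image yields four inputs, and the label is the number of quarter turns.

```python
import numpy as np

def rotation_pretext_batch(images):
    """Each image gives 4 inputs; label k means a rotation by k * 90 degrees."""
    inputs, labels = [], []
    for img in images:                     # img: (H, W) or (H, W, C), with H == W
        for k in range(4):
            inputs.append(np.rot90(img, k=k, axes=(0, 1)))
            labels.append(k)
    return np.stack(inputs), np.array(labels)

rng = np.random.default_rng(10)
images = rng.random((2, 8, 8, 3))          # two random 8x8 RGB "images"
x, y = rotation_pretext_batch(images)
print(x.shape, y)   # (8, 8, 8, 3) [0 1 2 3 0 1 2 3]
```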

Semi-supervised learning

Pre-training: all data (no labels)

Target: part of the data with labels

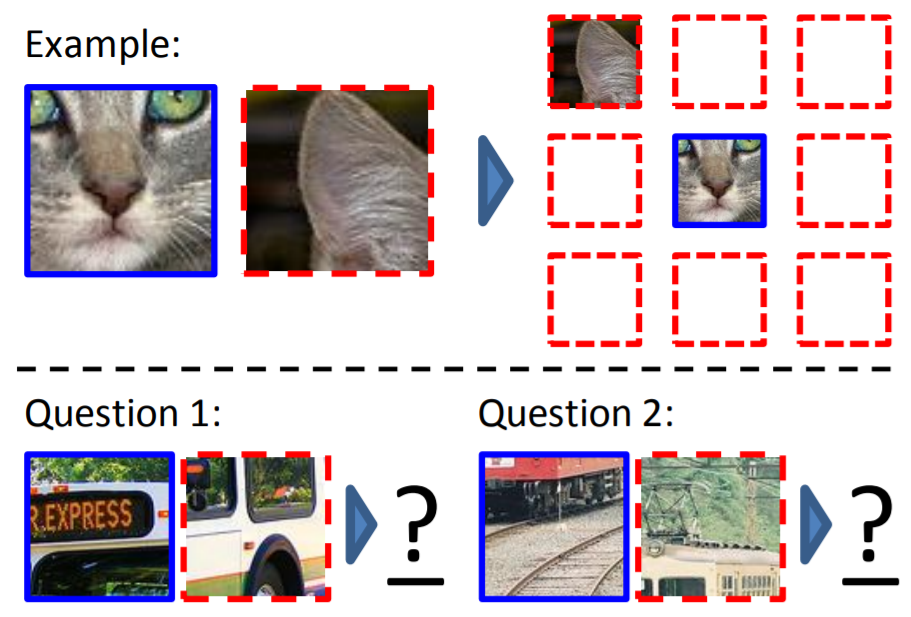

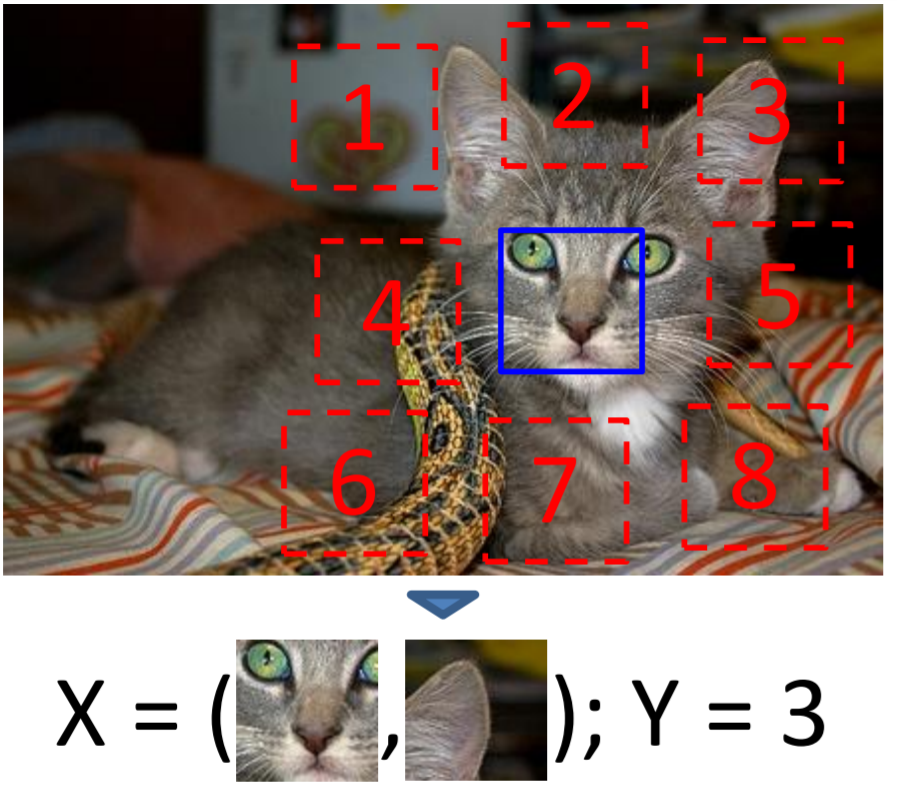

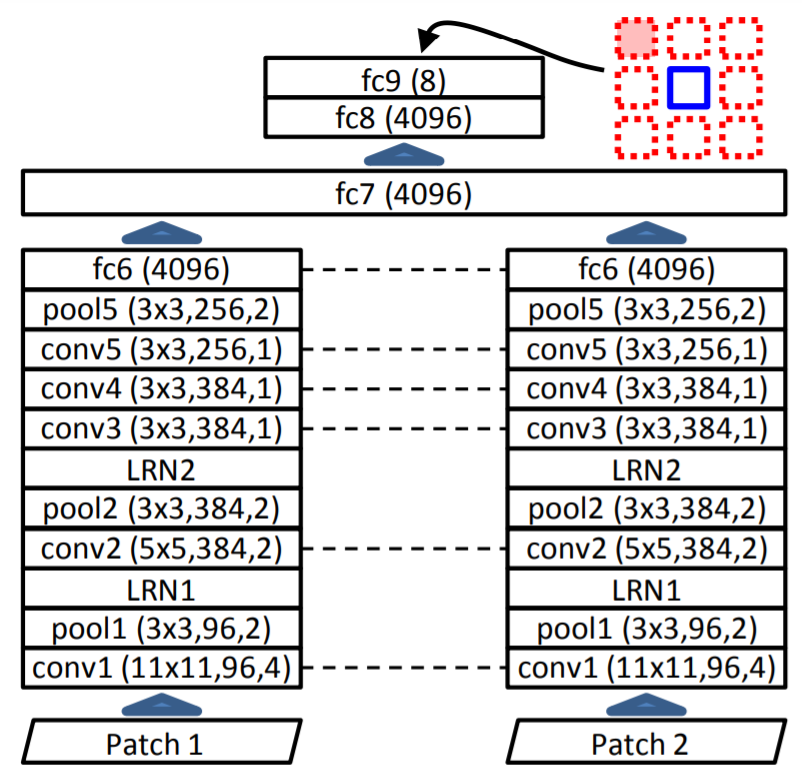

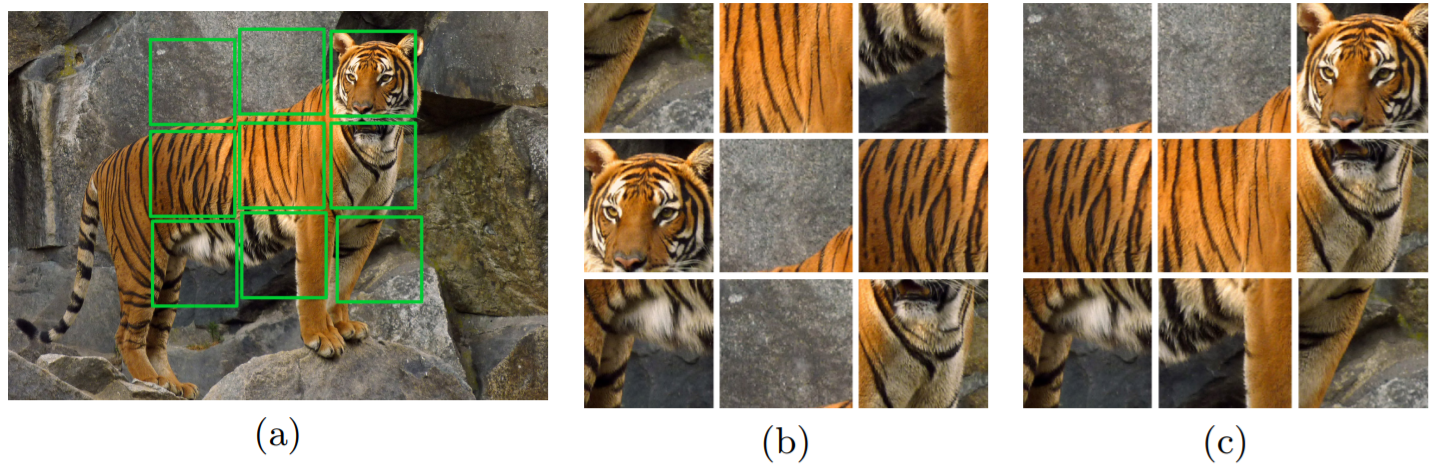

Image-based transformation: relative position

Transformation

Create a pair of patches and predict their relative position.

To solve this problem, the network needs to understand the object.

Problem

Classification with 8 classes

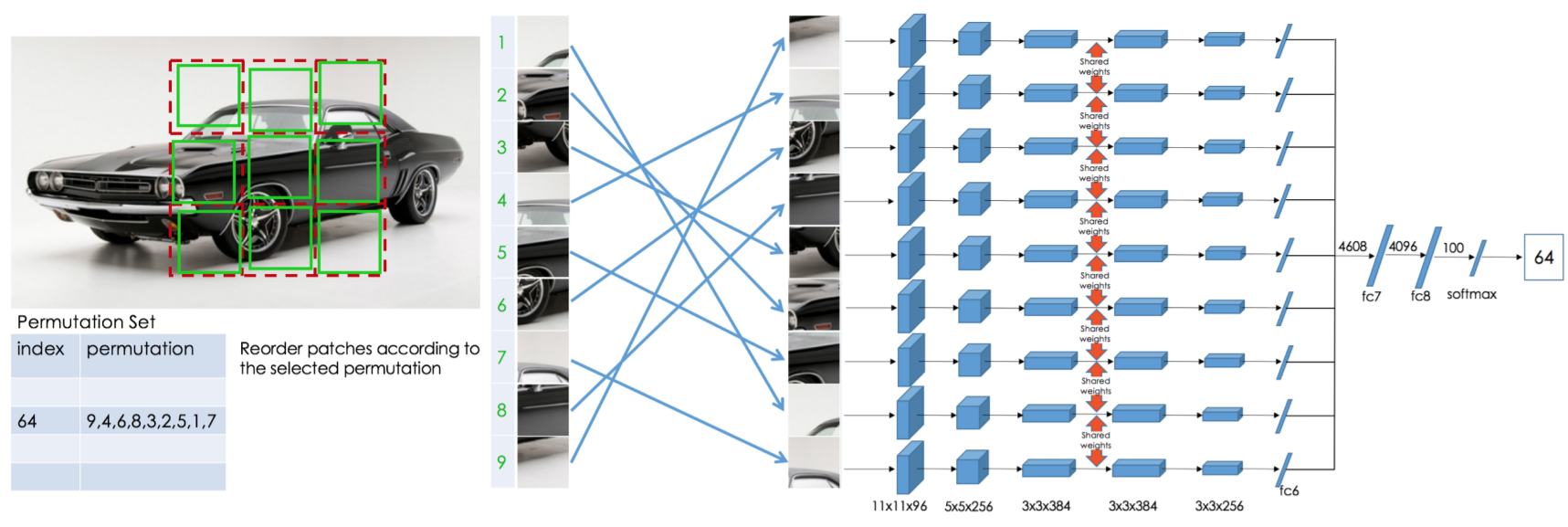
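A sketch of how one training pair can be sampled, assuming a 3x3 grid of square patches at the center of the image and the 8 neighbors of the central patch as classes (the label order in `OFFSETS` and the helper name are hypothetical). Practical versions typically add gaps and random jitter between patches so the network cannot rely on trivial low-level cues; this sketch omits them.

```python
import numpy as np

# The 8 neighbor positions around the central patch as (row offset, col offset); index = class
OFFSETS = [(-1, -1), (-1, 0), (-1, 1),
           (0, -1),           (0, 1),
           (1, -1),  (1, 0),  (1, 1)]

def relative_position_pair(img, patch, rng):
    """Return (center_patch, neighbor_patch, label) from a 3x3 patch grid centered in img."""
    H, W = img.shape[:2]                                      # requires H, W >= 3 * patch
    top, left = (H - 3 * patch) // 2, (W - 3 * patch) // 2    # top-left corner of the grid
    label = int(rng.integers(8))
    dr, dc = OFFSETS[label]

    def crop(r, c):                                           # r, c in {0, 1, 2}: grid cell
        return img[top + r * patch: top + (r + 1) * patch,
                   left + c * patch: left + (c + 1) * patch]

    return crop(1, 1), crop(1 + dr, 1 + dc), label

rng = np.random.default_rng(11)
img = rng.random((64, 64, 3))
center, neighbor, label = relative_position_pair(img, patch=16, rng=rng)
print(center.shape, neighbor.shape, label)
```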

Image-based transformation: jigsaw puzzle

Contrastive methods

Image based methods

Predict a transformation of an image, which may not require complete knowledge of the object.

What properties should the pre-trained network have?

- robust to image variation (illumination, deformation)

- discriminative with respect to different objects
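A sketch of how a positive pair can be built: two random views of the same image that differ by crop, flip and illumination, so a network that maps them close together becomes robust to these variations. The specific augmentations and their ranges here are assumptions; SimCLR itself uses a stronger pipeline (random resized crops, color distortion, blur).

```python
import numpy as np

def random_view(img, crop, rng):
    """One augmented view of an (H, W, C) image in [0, 1]: random crop, flip, brightness change."""
    H, W = img.shape[:2]
    top = rng.integers(0, H - crop + 1)
    left = rng.integers(0, W - crop + 1)
    view = img[top:top + crop, left:left + crop]
    if rng.random() < 0.5:
        view = view[:, ::-1]                                  # horizontal flip
    return np.clip(view * rng.uniform(0.6, 1.4), 0.0, 1.0)   # illumination change

rng = np.random.default_rng(12)
img = rng.random((32, 32, 3))
v1, v2 = random_view(img, 24, rng), random_view(img, 24, rng)   # one positive pair
```

Views of different images in the same batch then serve as negatives, which pushes the features to stay discriminative between objects.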

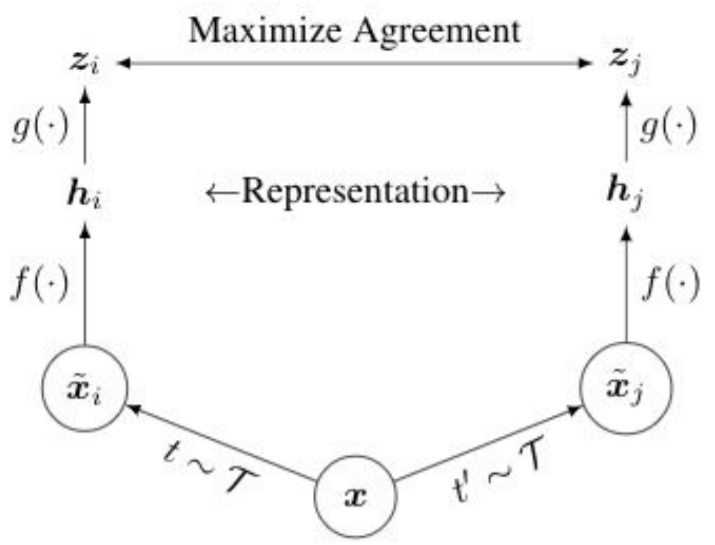

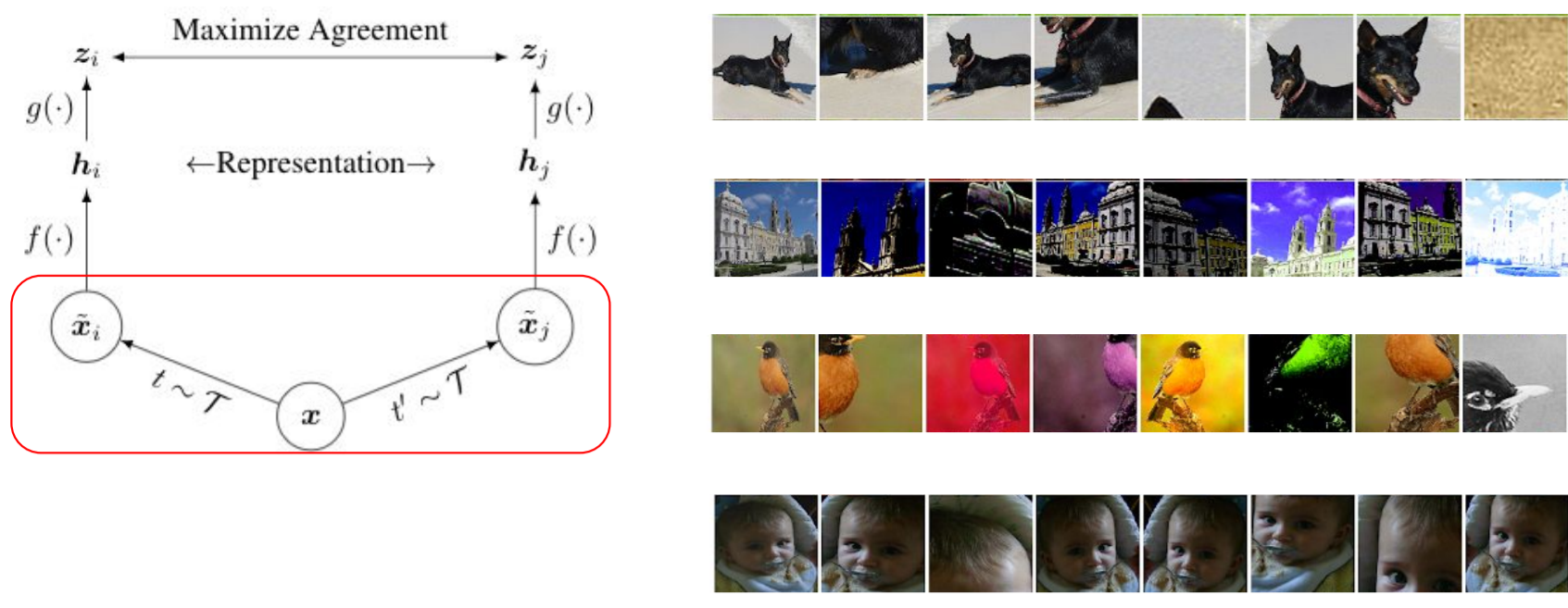

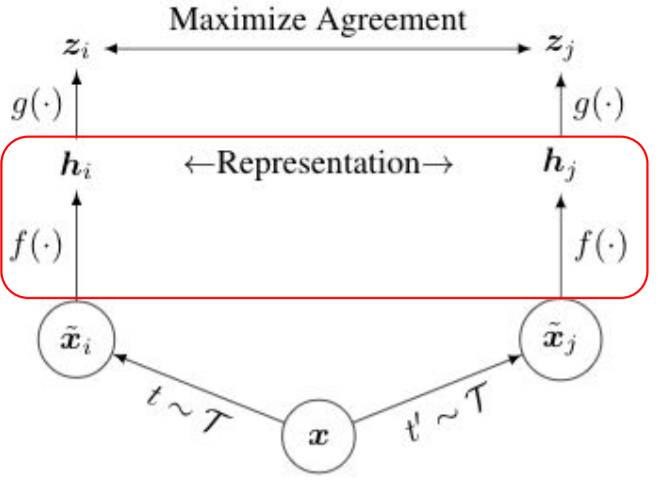

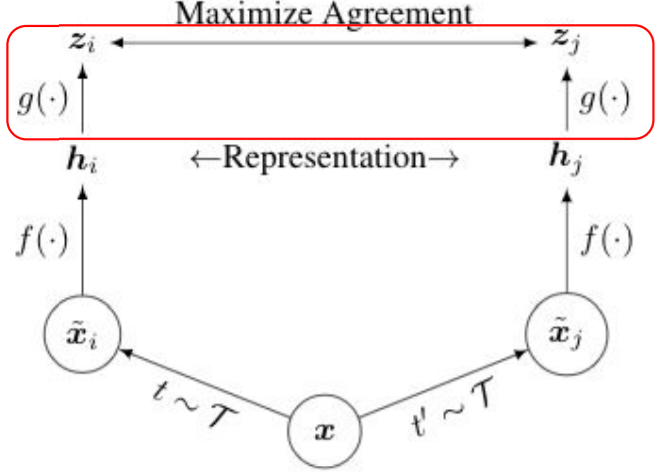

SimCLR

Loss function

Let

The loss function is:

Analysis

A cross-entropy applied to the label corresponding to the pair generated from
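The formula did not survive extraction. A NumPy sketch of the normalized temperature-scaled cross-entropy (NT-Xent) loss as described in the SimCLR paper, assuming N positive pairs whose projections are the rows of `z1` and `z2`, cosine similarity and temperature `tau` (names and values are illustrative). For each anchor, it is a cross-entropy over the other 2N - 1 samples where the "label" is the index of its pair:

```python
import numpy as np

def nt_xent(z1, z2, tau=0.5):
    """NT-Xent loss for N positive pairs (z1[i], z2[i]); mean over the 2N anchors."""
    z = np.concatenate([z1, z2], axis=0)                    # (2N, d)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)        # cosine similarity via unit vectors
    sim = z @ z.T / tau                                     # (2N, 2N) scaled similarities
    np.fill_diagonal(sim, -np.inf)                          # exclude k == i from the softmax
    n = len(z1)
    positive = np.concatenate([np.arange(n, 2 * n), np.arange(n)])   # index of each pair
    logits = sim - sim.max(axis=1, keepdims=True)           # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return -log_prob[np.arange(2 * n), positive].mean()     # cross-entropy with the pair as target

rng = np.random.default_rng(13)
z1 = rng.normal(size=(4, 8))
aligned = nt_xent(z1, z1 + 0.01 * rng.normal(size=(4, 8)))  # views that agree
random_pairs = nt_xent(z1, rng.normal(size=(4, 8)))         # unrelated "views"
print(aligned, random_pairs)
```

The loss is low when each view is closer to its own pair than to the other samples of the batch, which is the robust-yet-discriminative property listed for the pre-trained network.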

Conclusion

Unsupervised learning is one of the big topics in machine learning right now.

- Can we extract better/general features?

- Can we reduce the training time?

- Do we really exploit all the information in the data?

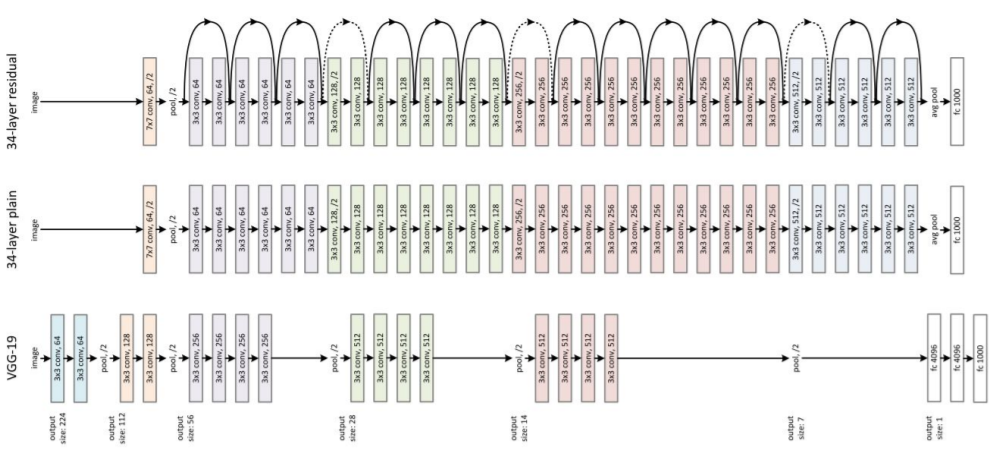

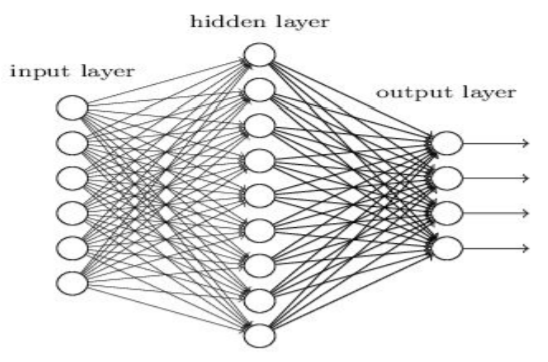

Neural Network notes