Motivation

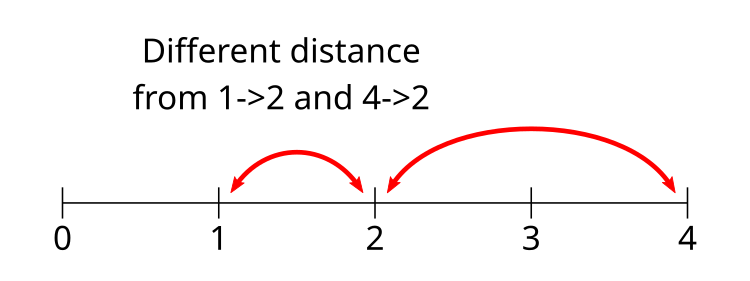

Simple problem:

Predict if Bob will like a movie given Alice's grade

Hypothesis:

A simple threshold
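A minimal sketch of the threshold hypothesis, under assumptions not stated on the slide: grades lie on a 0 to 10 scale, Bob tends to like what Alice likes, and the cut-off value `5.0` is purely illustrative. The name `predict_threshold` is hypothetical.

```python
def predict_threshold(alice_grade: float, threshold: float = 5.0) -> bool:
    """Predict that Bob likes the movie when Alice's grade reaches the threshold.

    The threshold is the single parameter of this hypothesis; 5.0 is an
    assumed placeholder, not a value taken from the slides.
    """
    return alice_grade >= threshold


if __name__ == "__main__":
    for grade in (8.0, 3.0):
        print(grade, predict_threshold(grade))
```

Changing `threshold` is the only way to adapt this hypothesis to data, which is what limits it to a single input.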

Motivation

Simple problem:

Predict if Bob will like a movie given Alice's and Carol's grades

Hypothesis:

An affine function
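With two inputs, the affine hypothesis can be sketched as a weighted sum plus a bias, with the prediction given by the sign of the result. The weights, the bias, and the function names below are illustrative assumptions, not values from the slides.

```python
def affine_score(alice: float, carol: float,
                 w_alice: float = 1.0, w_carol: float = -0.5,
                 bias: float = -2.0) -> float:
    """Affine function of the two grades: w_alice * alice + w_carol * carol + bias.

    All three parameters are assumed placeholders; in practice they would
    be learned from data.
    """
    return w_alice * alice + w_carol * carol + bias


def predict_affine(alice: float, carol: float) -> bool:
    """Predict that Bob likes the movie when the affine score is positive."""
    return affine_score(alice, carol) > 0


if __name__ == "__main__":
    print(affine_score(8.0, 4.0), predict_affine(8.0, 4.0))
    print(affine_score(3.0, 6.0), predict_affine(3.0, 6.0))
```

A negative weight, as assumed here for Carol, would express that Bob tends to disagree with her.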

Motivation

More complicated problem:

Predict if Bob will like a movie given a large user database

Hypothesis:

An affine function

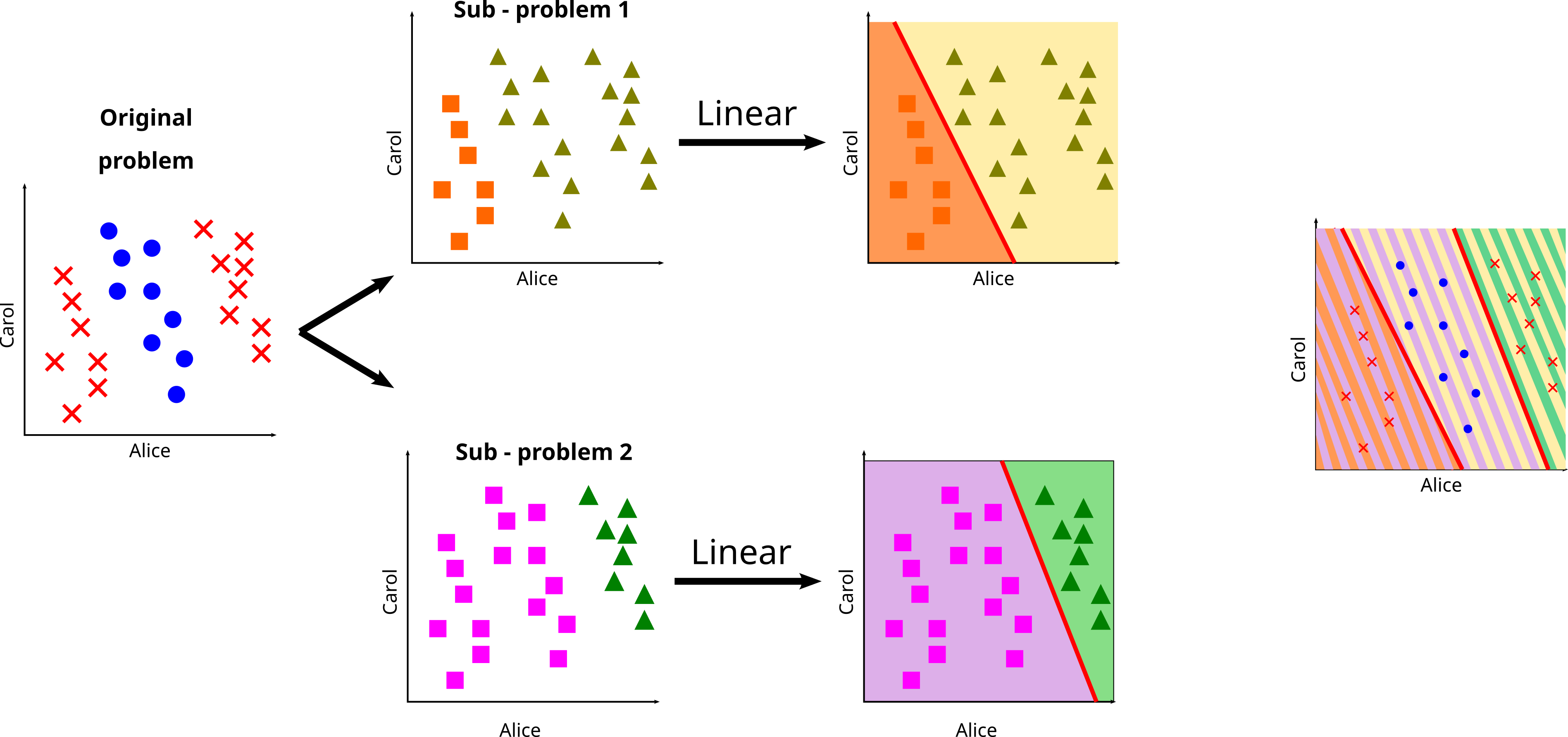
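The same affine hypothesis extends to any number of users: one weight per user, plus a bias. The sketch below assumes that each user's grade for the movie is available as a number; the grades, weights, and names are hypothetical.

```python
from typing import Sequence


def affine_score(grades: Sequence[float], weights: Sequence[float],
                 bias: float) -> float:
    """Compute sum_i weights[i] * grades[i] + bias."""
    if len(grades) != len(weights):
        raise ValueError("grades and weights must have the same length")
    return sum(w * g for w, g in zip(weights, grades)) + bias


def predict_like(grades: Sequence[float], weights: Sequence[float],
                 bias: float) -> bool:
    """Predict that Bob likes the movie when the affine score is positive."""
    return affine_score(grades, weights, bias) > 0


# Illustrative values only: four users' grades and assumed parameters.
grades = [8.0, 4.0, 6.0, 2.0]
weights = [0.5, -0.25, 0.25, 0.0]
bias = -3.0

if __name__ == "__main__":
    print(affine_score(grades, weights, bias), predict_like(grades, weights, bias))
```

A zero weight, as assumed for the last user, means that user's grade has no influence on the prediction.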

Hypothesis:

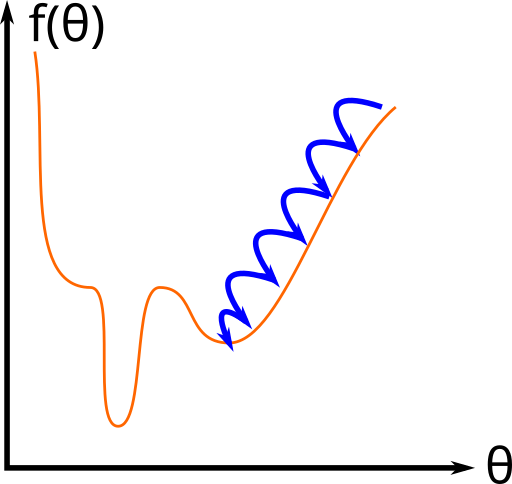

A non-affine function?

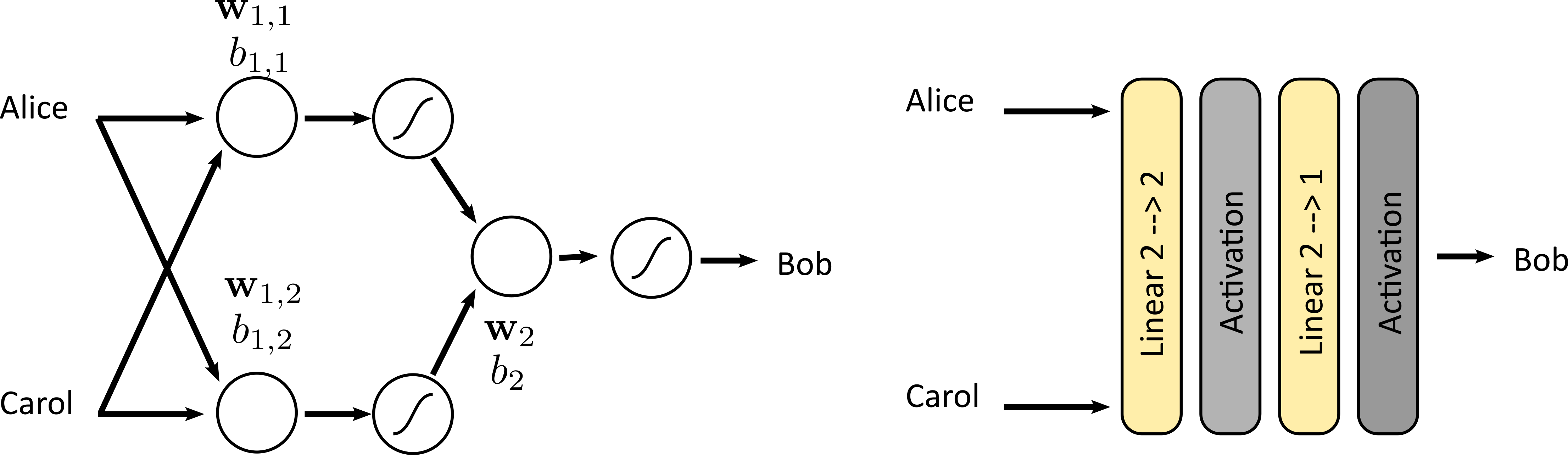

Motivation

Ideally

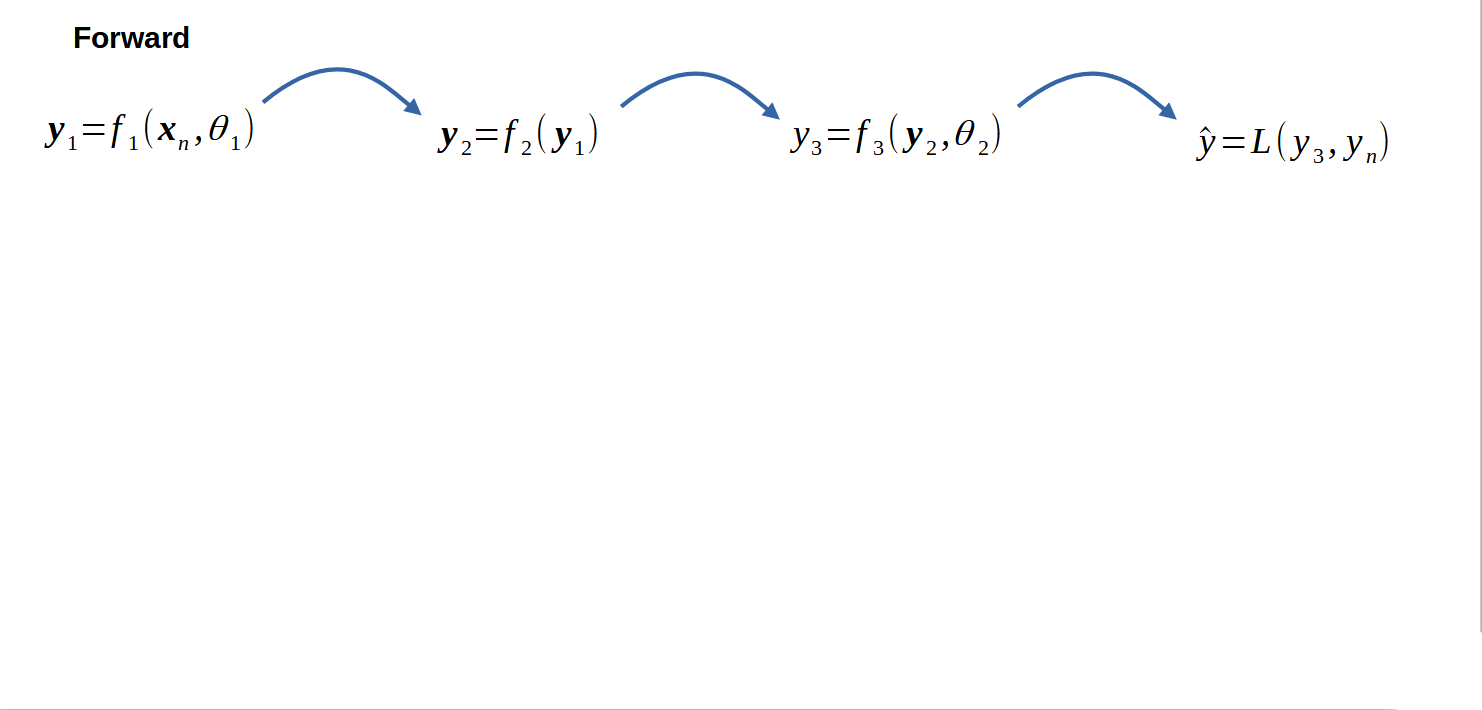

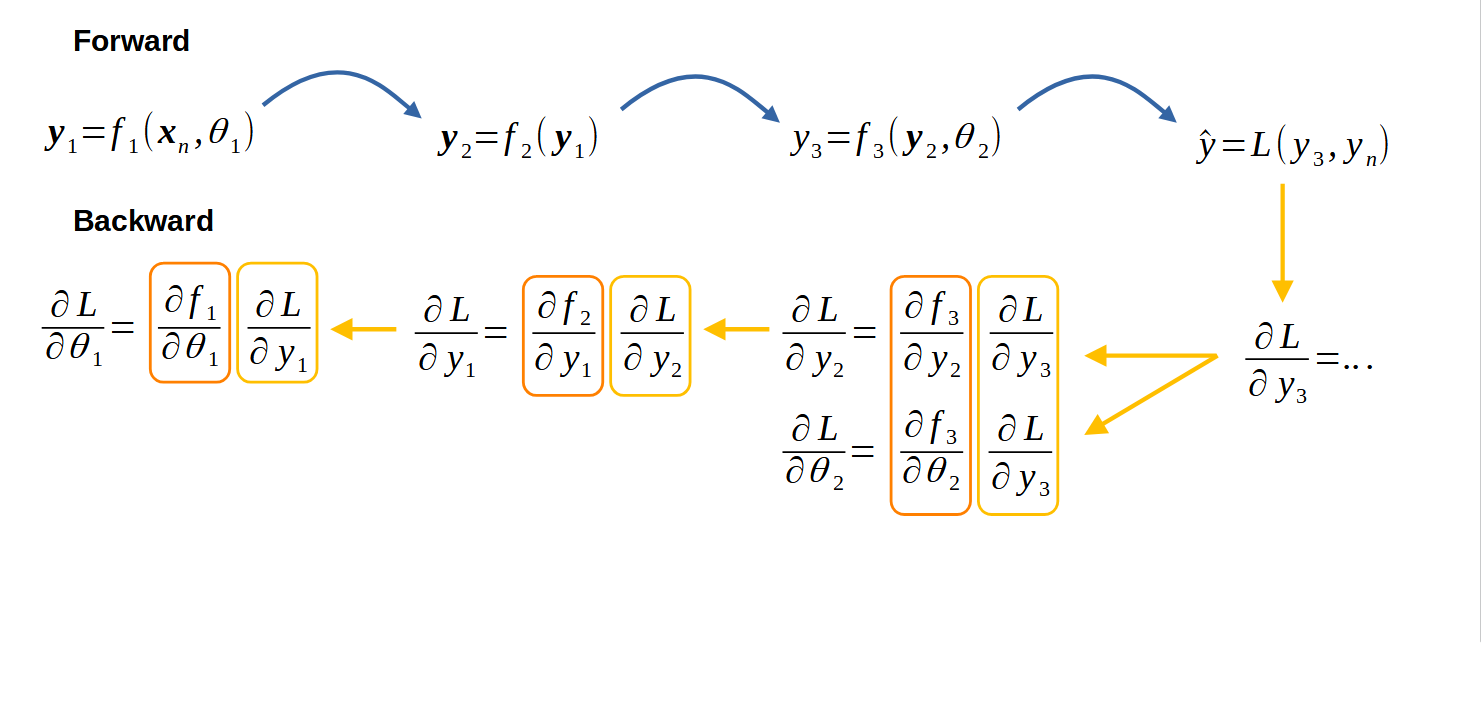

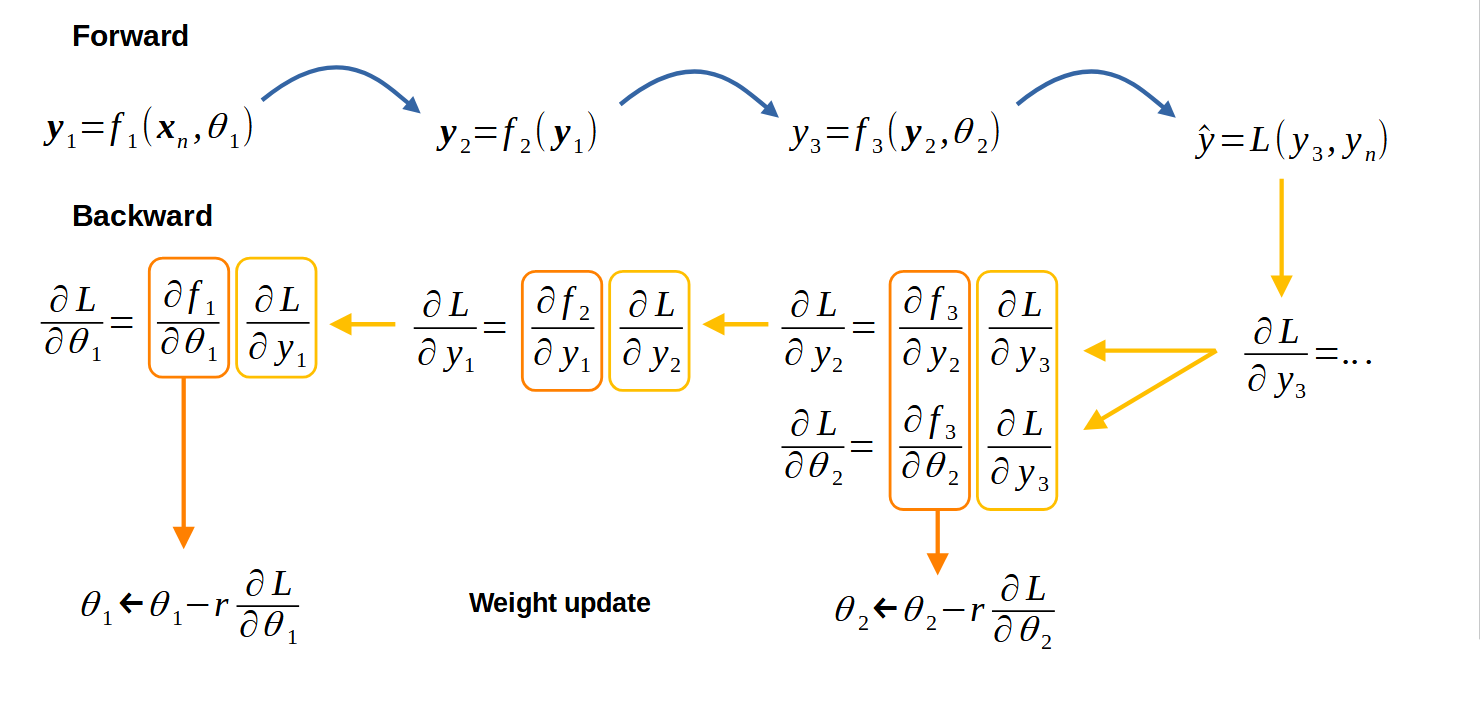

- General machine learning architectures / bricks

- Use the same approach for various problems

- Learn the parameters

- Use data to automatically extract knowledge

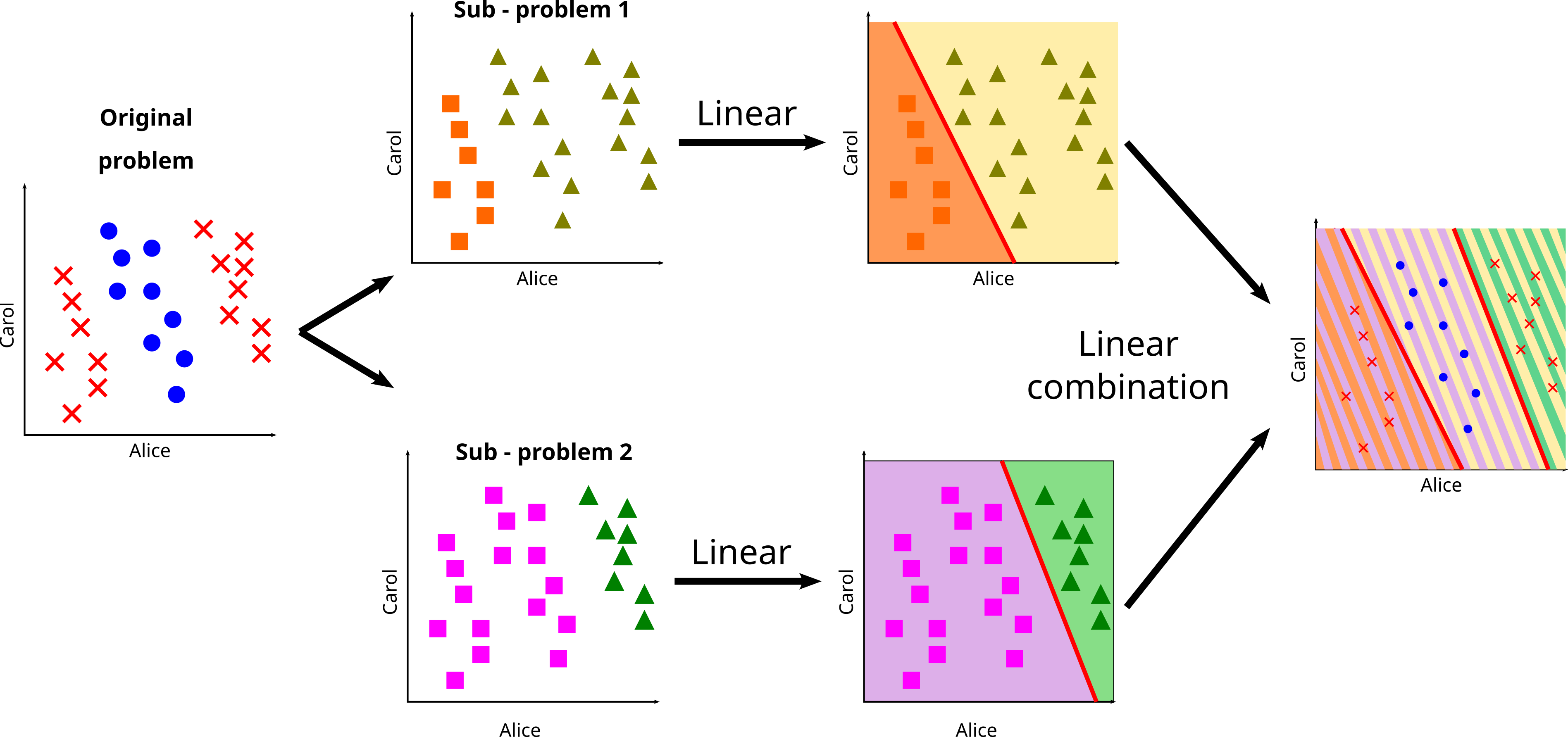

Neural networks

- Currently one of the most effective approaches in machine learning

Artificial neuron

Historical Background

- 1958: Rosenblatt, perceptron

- 1965: Ivakhnenko and Lapa, neural networks with several layers

- 1975-1990: Backpropagation, Convolutional Neural Networks

- 2007+: Deep Learning era (see Deep Learning session)

  - Large convolutional neural networks

  - Transformers

  - Generative models

  - "Foundation models"

  - ...

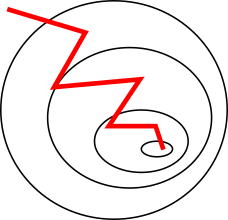

Bio-inspired model

- The brain is made of neurons.

- Neurons receive, process, and transmit action potentials.

- Multiple receivers (dendrites), single transmitter (axon)